In this demo I will show how to

provision PDB on RedHat Linux from a non-CDB 11gr2 on Solaris.

You maybe ask what the reason is for

moving to RedHat from Solaris. Well the answer is that the move is not based

on a platform reason it is based on moving to 12c without impacting the current

development environment.

Development team 1 has been on 12c PDB

database DEV1DB for the last week and is loving the new database features.

Development team 1 went and told Development team 2 about their new cool

database features. The manager for development came and asked to get team 2 on

12c but does not want to impact the current development environment on 11gr2

Solaris. The manager also requested not to share the 12c in the same

environment as team 1.

I began to investigate what would be

the best option for completing this in the least amount of time.

I have database DBTEST1 11.2.0.3 on

Solaris and I want to create a new PDB called DEV2DB and transport the

tablespace and import data from DBTEST1.

Here are the steps I completed to

give development team 2 their own PDB 12c database inside container database TESTDBS.

Source Database:

dbtest1 on Solaris 64bit

Target Database:

Pluggable database dev2db on RedHat Linux 64bit

Steps:

1. Checks

- The source database version needs to be minimum of 11.2.0.3+.

- The source database needs minimum compatible parameter set to 11.2.0.3.0.

- The source database and PDB database character should match.

- Set you environment to your source database on source server.

2. Create directory on source server to hold dmp and rman files.

$mkdir -p /orabkup/dbtest1/dpdump

3. Create Oracle directory on source database for export job.

SQL>create directory "EXP_DATA_PUMP_DIR" as '/orabkup/dbtest1/dpdump/';

4. On source database place the users and non oracle tablespaces in read only mode.

SQL> select tablespace_name from dba_tablespaces;

In my case it is just the USERS and SOE tablespace

SQL> alter tablespace USERS read only;

SQL> alter tablespace SOE read only;

5. Execute full export from source system with version set to 12 and transportable set to always

$expdp \'sys/temp1234 AS SYSDBA\' full=y job_name=EXPORT_DEV2DB dumpfile=datapump_dev2db.dmp DIRECTORY=EXP_DATA_PUMP_DIR LOGFILE=export_datapump_dev2db.log VERSION=12 transportable=always

Once the export is complete note down the required datafiles for transportable tablespace.

6. Execute RMAN on source system to backup the datafiles needed for transportable tablespaces.

$rman target /

run

{

CONVERT DATAFILE '+DATADG/dbtest1/datafile/soe.262.814263143' DB_FILE_NAME_CONVERT="+DATADG/dbtest1/datafile/soe.262.814263143","/orabkup/dbtest1/dpdump/soe.262.814263143" FORMAT='/orabkup/dbtest1/dpdump/soe.262.814263143';

CONVERT DATAFILE '+DATADG/dbtest1/datafile/users.260.814258995' DB_FILE_NAME_CONVERT="+DATADG/dbtest1/datafile/users.260.814258995","/orabkup/dbtest1/dpdump/users.260.814258995" FORMAT='/orabkup/dbtest1/dpdump/users.260.814258995';

}

7. On source database place tablespaces back into read write mode.

SQL> alter tablespace USERS read write;

SQL> alter tablespace SOE read write;

8. Create directory on target server

$mkdir -p /orabkup/dbtest1

9. Transfer files to target server from source server.

$scp -r dpdump oracle@alpddbs002:/orabkup/dbtest1/

Files now on target system

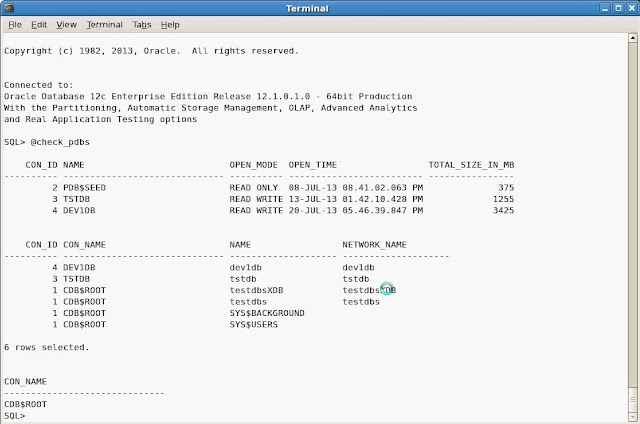

10. On target system connect to container (CDB) database and check current PDBs.

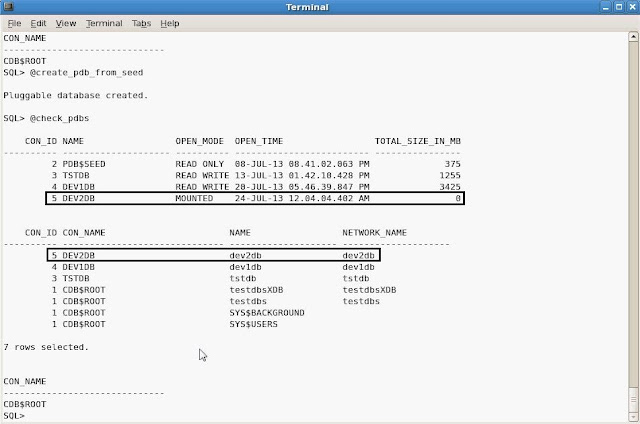

11. On target system connect to container (CDB) database and create the new pluggable (PDB) database.

Since we are going to plug the default tablespace from the source system I will not create the PDB with a default tablespace.

SQL>CREATE PLUGGABLE DATABASE dev2db

ADMIN USER pdbadm IDENTIFIED BY temp1234

ROLES = (connect);

- Observation of the alert log during and after creation of new PDB dev2db

12. Check PDBs after the newly created PDB. The new PDB dev2db is created with con_id 5 but in mounted mode.

13. Open new PDB dev2db

- Now the dev2db PDB is in read write mode.

- Review datafiles in CDB for all PDBs

- Review tablespaces for all PDBs

14. Create ASM directory to storage datafiles

$asmcmd -p

ASMCMD>cd dg01

ASMCMD>mkdir DEV2DB

15. Use RMAN to restore datafiles from source system. I am setting a temporary alias to the datafiles that I will rename in the next step.

$rman

RMAN>CONNECT TARGET sys/temp1234@(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=alpddbs002)(PORT=1521))(CONNECT_DATA=(SID=testdbs)))

RMAN>run

{

CONVERT DATAFILE '/orabkup/dbtest1/dpdump/soe.262.814263143' DB_FILE_NAME_CONVERT="/orabkup/dbtest1/dpdump/soe.262.814263143","+DG01/dev2db/soe_data_1" FORMAT='+DG01/dev2db/soe_data_1';

CONVERT DATAFILE '/orabkup/dbtest1/dpdump/users.260.814258995' DB_FILE_NAME_CONVERT="/orabkup/dbtest1/dpdump/users.260.814258995","+DG01/dev2db/users_data_1" FORMAT='+DG01/dev2db/users_data_1';

}

{

CONVERT DATAFILE '/orabkup/dbtest1/dpdump/soe.262.814263143' DB_FILE_NAME_CONVERT="/orabkup/dbtest1/dpdump/soe.262.814263143","+DG01/dev2db/soe_data_1" FORMAT='+DG01/dev2db/soe_data_1';

CONVERT DATAFILE '/orabkup/dbtest1/dpdump/users.260.814258995' DB_FILE_NAME_CONVERT="/orabkup/dbtest1/dpdump/users.260.814258995","+DG01/dev2db/users_data_1" FORMAT='+DG01/dev2db/users_data_1';

}

16. Connect to PDB to check if SOE user exist as we know it will not. I use the full connection string for this demo but you can add the entry into the Oracle home and connect just with the identifier.

$sqlplus /nolog

SQL>connect sys/temp1234@"(DESCRIPTION =(ADDRESS = (PROTOCOL = TCP)(HOST = alpddbs002)(PORT = 1521))(CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = dev2db)(INSTANCE_NAME=testdbs)))" AS SYSDBA

17. Create Oracle directory in PDB database.

$sqlplus /nolog

SQL>CREATE DIRECTORY "IMP_DATA_PUMP_DIR" AS '/orabkup/dbtest1/dpdump/';

18. Execute import proccess

Note: I did a remap of the tablespace SOE to SOE_DATA to confirm to my tablespace naming.

$/orabase/product/12.1.0/db_1/bin/impdp \'sys/temp1234@"(DESCRIPTION =(ADDRESS = (PROTOCOL = TCP)(HOST = alpddbs002)(PORT = 1521))(CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = dev2db)(INSTANCE_NAME=testdbs)))" AS SYSDBA\' full=y dumpfile=datapump_dev2db.dmp DIRECTORY=IMP_DATA_PUMP_DIR LOGFILE=import_datapump_dev2db.log VERSION=12 TRANSPORT_DATAFILES=+DG01/dev2db/soe_data_1,+DG01/dev2db/users_data_1 job_name=imp_dev2db parallel=2 REMAP_TABLESPACE='SOE':'SOE_DATA'

- First observation of the alert log shows when the datapump job started the tablespace SOE_DATA and USERS was plugged into PDB DEV2DB.

- Second observation of the alert log shows when the datapump job completed the tablespace SOE_DATA and USERS what alerted to read write.

19. Connect to PDB to check if SOE user exist now after the import. I use the full connection string for this demo but you can add the entry into the Oracle home and connect just with the identifier.

$sqlplus /nolog

SQL>connect sys/temp1234@"(DESCRIPTION =(ADDRESS = (PROTOCOL = TCP)(HOST = alpddbs002)(PORT = 1521))(CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = dev2db)(INSTANCE_NAME=testdbs)))" AS SYSDBA

- We now see that the SOE schema exist as well as an application user account.

20. Check tablespaces at PDB level.

$sqlplus /nolog

SQL>connect sys/temp1234@"(DESCRIPTION =(ADDRESS = (PROTOCOL = TCP)(HOST = alpddbs002)(PORT = 1521))(CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = dev2db)(INSTANCE_NAME=testdbs)))" AS SYSDBA

- Review of tablespaces at PDB level shows the USERS and SOE_DATA tablespaces now exist.

- We can see that SOE_DATA tablespace exist in the new PDB DEV2DB. We also see that an SOE_DATA tablespace exist in the PDB DEV1DB.

22. Set DEV2DB tablespace default and storage limits.

$sqlplus /nolog

SQL>connect sys/temp1234@"(DESCRIPTION =(ADDRESS = (PROTOCOL = TCP)(HOST = alpddbs002)(PORT = 1521))(CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = dev2db)(INSTANCE_NAME=testdbs)))" AS SYSDBA

- Set default tablespace

SQL>ALTER PLUGGABLE DATABASE DEFAULT TABLESPACE SOE_DATA;

- Set storage limit for total storage for all tablespaces in PDB DEV2DB to 8G.

SQL>ALTER PLUGGABLE DATABASE STORAGE(MAXSIZE 8G);

- Set temp usage limit to 1G.

SQL>ALTER PLUGGABLE DATABASE STORAGE(MAX_SHARED_TEMP_SIZE 1G);

23. Use SQL Developer to compare schema SOE between source DBTEST1 and target DEV2DB. SQL Developer did not show any differences.

- Everything compare equal.

24. Run the SOE application against both PDB databases.

- Here you can see two SwingBench Order Entry application running. One connected to PDB DEV1DB and the other connected to PDB DEV2DB.

25. Enterprise Manager 12c top activity page at CDB level shows top activity for PDBs.

26. Quick look at AWR report from CDB level shows new column PDB Name. In a different blog post I will break down the multitenant AWR report.

- You can see the IO break down by PDB

- Top SQL statement shows which PDB is executing the top query.

- We can see which PDB has the top physical segment reads.

Conclusion:

Development team 2 now has their own PDB in the CDB database. To the development it is not a shared environment as the PDB database to end user acts the same as a non-CDB database.

Below are my scripts I used in this demo

check_pdbs.sql

set linesize 100

col open_time format a25

--pdbs

select con_id,name,open_mode,open_time,ceil(total_size)/1024/1024 total_size_in_mb from v$pdbs

order by con_id asc;

--active services

col name format a20

col network_name format a20

select con_id,con_name,name,network_name from v$active_services

order by con_id asc;

--show container id

show con_name

check_pdbs_datafiles.sql

set linesize 500

set pagesize 100

col name format a20

col db_file_name format a80

select b.con_id,b.name,a.name as db_file_name from v$datafile a, v$pdbs b

where a.con_id= b.con_id;

check_pdbs_tablespaces.sql

set linesize 500

set pagesize 100

SELECT d.con_id,d.status "Status", d.tablespace_name "Name", d.CONTENTS "Type",

d.extent_management "Extent Management",

TO_CHAR (NVL (a.BYTES / 1024 / 1024, 0), '99,999,990.900') "Size (M)",

TO_CHAR (NVL (a.BYTES - NVL (f.BYTES, 0), 0) / 1024 / 1024,

'99999999.999'

)

|| '/'

|| TO_CHAR (NVL (a.BYTES / 1024 / 1024, 0), '99999999.999') "Used (M)",

TO_CHAR (NVL ((a.BYTES - NVL (f.BYTES, 0)) / a.BYTES * 100, 0),

'990.00'

) "Used %"

FROM SYS.cdb_tablespaces d,

(SELECT con_id,tablespace_name, SUM (BYTES) BYTES

FROM cdb_data_files

GROUP BY con_id,tablespace_name) a,

(SELECT con_id,tablespace_name, SUM (BYTES) BYTES

FROM cdb_free_space

GROUP BY con_id,tablespace_name) f

WHERE d.con_id=a.con_id

and d.con_id=f.con_id

and d.tablespace_name = a.tablespace_name(+)

AND d.tablespace_name = f.tablespace_name(+)

UNION ALL

SELECT d.con_id,d.status "Status", d.tablespace_name "Name", d.CONTENTS "Type",

d.extent_management "Extent Management",

TO_CHAR (NVL (a.BYTES / 1024 / 1024, 0), '99,999,990.900') "Size (M)",

TO_CHAR (NVL (t.BYTES, 0) / 1024 / 1024, '99999999.999')

|| '/'

|| TO_CHAR (NVL (a.BYTES / 1024 / 1024, 0), '99999999.999') "Used (M)",

TO_CHAR (NVL (t.BYTES / a.BYTES * 100, 0), '990.00') "Used %"

FROM SYS.cdb_tablespaces d,

(SELECT con_id,tablespace_name, SUM (BYTES) BYTES

FROM cdb_temp_files

GROUP BY con_id,tablespace_name) a,

(SELECT con_id,tablespace_name, SUM (bytes_cached) BYTES

FROM v$temp_extent_pool

GROUP BY con_id,tablespace_name) t

WHERE d.con_id=a.con_id

AND d.con_id=t.con_id

AND d.tablespace_name = a.tablespace_name(+)

AND d.tablespace_name = t.tablespace_name(+)

order by 1 asc;

Hi Javier,

ReplyDeleteFirst of all thanks for posted. I've little problem in our test system for this case.

In section 18, I took error as on below.

ORA-39342: Internal error - failed to import internal objects tagged with LABEL_SECURITY due to ORA-00955: name is already used by an existing object.

Processing object type DATABASE_EXPORT/PRE_INSTANCE_IMPCALLOUT/MARKER

Processing object type DATABASE_EXPORT/PLUGTS_FULL/PLUGTS_BLK

ORA-39123: Data Pump transportable tablespace job aborted

ORA-19722: datafile /disk2/orabckp/import/EXAMPLE01.DBF_2 is an incorrect version

I try to move Example.dbf and User.dbf. Where is my mistake? I didn't find it...

Thanks....

Seems your database you are importing into already has the LABEL_SECURITY object. These seems to be from example schemas maybe when you created the target database you included the sample schemas. If they do exist you should remove from the target database or exclude from the target export. Did you use the version parameter in the export?

ReplyDeleteHi Javier,

ReplyDeleteI am provisioning from 11gR2 to 12c PDB. I got an error at section 15.

When i try to convert datafiles using RMAN at PDB level i am getting the error RMAN-3009: failure of conversion at target command and ORA-19625: error identifying file /u01/app/dpump/users.259.831595631

But i am able to convert at CDB level.

Please let me know how to convert at PDB level.

Thanks,

Manjunath

Reddy did you create the path location for the pdb database?

ReplyDeleteJavier Thanks for the immediate reply. I am using the default path location which is available while creating the PDB database (Path location is +DATA/RACDB/ECB5DC6ADD28E80BE0430BOFA89BAC4D/DATAFILE/ (users_data_1 is the filename)

ReplyDeleteHi Javier, I even tried after creating the mkdir using ASMCMD as PDB name (PDB3) and tried to convert as +DATA/PDB3/users_data_1 but i am not able to convert it. Its throws the same error.

ReplyDeletePlease assist me how to go ahead.

Does the file /u01/app/dpump/users.259.831595631 exist?

ReplyDeleteJavier,

ReplyDeleteYes the file /u01/app/dpump/users.259.831595631 exists. But if i try to convert the same file by connecting rman to CDB it is converting without errors.

Thanks,

Manjunath

Reddy how are you connecting to the PDB?

ReplyDeleteHi Javier,

ReplyDeleteI am connecting to the PDB as CONNECT TARGET "pdbadm/pdbadm@pdb3 AS SYSBACKUP".

Thanks,

Manjunath

Is the PDB in read write mode?

ReplyDeleteJavier thanks for the reply.

ReplyDeleteYes the PDB is in read write mode.

Reddy I do not see any issue with your steps and i did not see this issue. What is your target and source platform?

ReplyDeletethankx for the reply :)

ReplyDeletePortable Apps

Hello,

ReplyDeleteThe Article on Provision Cross Platform Pluggable give detail information about it.The Article explain with all screen shot and give detail information about it ,thanks for Sharing the information.Xamarin Consultant